publications

2025

- Guiding Reinforcement Learning with Selective Vision-Language Model Supervision
  Matteo Merler, Giovanni Bonetta, and Bernardo Magnini
  In ECAI 2025 Workshop on AI-based Planning for Complex Real-World Applications (CAIPI’25), Oct 2025

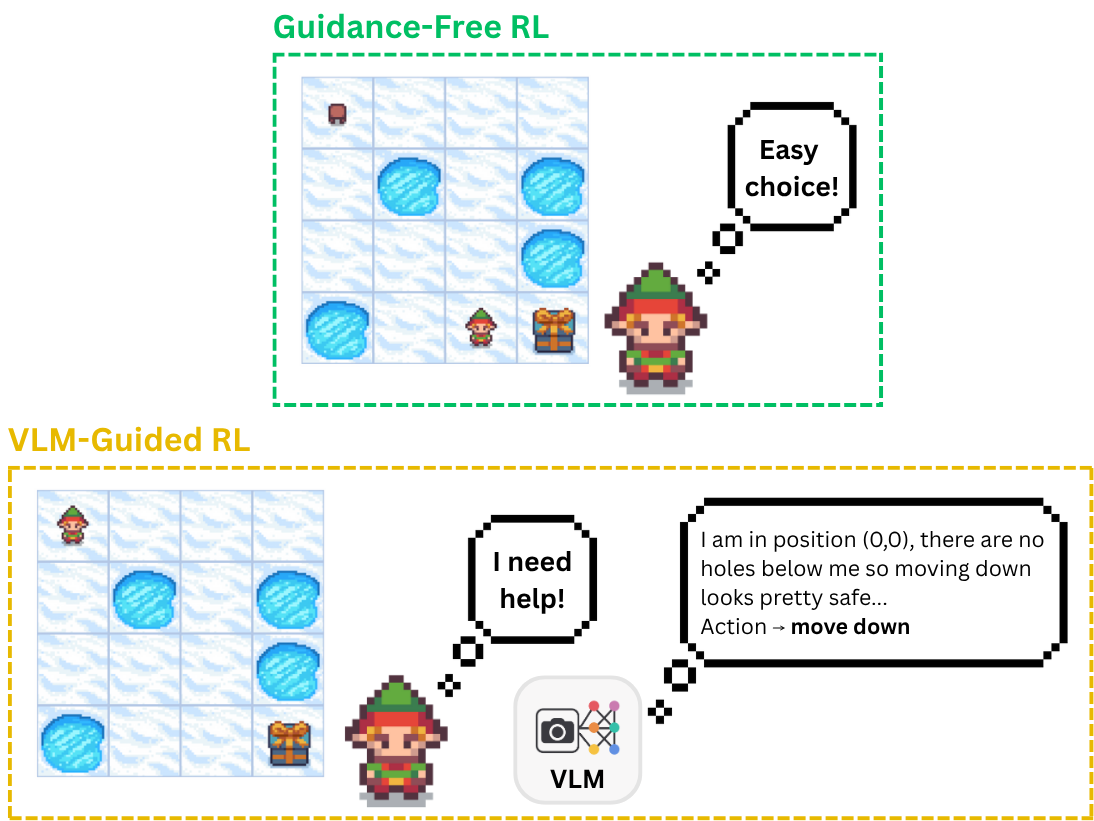

We propose a framework that augments a model-free Reinforcement Learning (RL) agent with selective guidance from a pre-trained Vision-Language Model (VLM). Our system is designed to assist the RL agent, which starts from scratch and has no prior notion of the environment, by leveraging the VLM’s common-sense knowledge to support its decision making. Rather than relying on the VLM at every timestep, the agent monitors its own uncertainty during training and defers to the VLM only when it is unsure about which action to take. Uncertainty is measured using the entropy of the policy distribution, and guidance is triggered when this entropy exceeds a predefined threshold. To reduce computational overhead, we introduce a stochastic gating mechanism that limits the frequency of VLM queries, along with a cache that stores past VLM responses for reuse. Experiments show that our method leads to more stable learning dynamics compared to standard PPO, with reduced variance across runs. In the FrozenLake environment, we observe that VLM guidance is primarily utilized during the early stages of training, gradually diminishing as the agent becomes more confident. This suggests that our selective guidance mechanism can support early exploration without hindering long-term autonomous behavior.
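The selection rule described in the abstract (entropy threshold, stochastic gate, response cache) can be illustrated with a minimal sketch. This is not the paper's implementation: the class name `SelectiveGuide`, the `query_vlm` callable, the default threshold and gate probability, and the order in which the cache and gate are checked are all assumptions made for illustration.

```python
import math
import random


def policy_entropy(probs):
    """Shannon entropy (in nats) of a discrete action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


class SelectiveGuide:
    """Hypothetical wrapper that defers to a VLM only when the policy is unsure."""

    def __init__(self, query_vlm, entropy_threshold=1.0, query_prob=0.5, seed=0):
        self.query_vlm = query_vlm              # assumed callable: state_key -> action index
        self.entropy_threshold = entropy_threshold
        self.query_prob = query_prob            # stochastic gate: chance of issuing a new query
        self.cache = {}                         # state_key -> cached VLM action
        self.rng = random.Random(seed)
        self.num_queries = 0

    def select_action(self, state_key, probs):
        # The agent's own choice, sampled from its policy distribution.
        own_action = self.rng.choices(range(len(probs)), weights=probs)[0]

        # Confident policy: act autonomously.
        if policy_entropy(probs) <= self.entropy_threshold:
            return own_action

        # Uncertain, but the VLM already answered for this state: reuse it.
        if state_key in self.cache:
            return self.cache[state_key]

        # Uncertain and uncached: the gate decides whether to pay for a query.
        if self.rng.random() >= self.query_prob:
            return own_action

        self.num_queries += 1
        action = self.query_vlm(state_key)
        self.cache[state_key] = action
        return action
```

With a uniform policy over four actions the entropy is ln 4 (about 1.39 nats), so a threshold of 1.0 triggers guidance, while a sharply peaked policy falls below the threshold and the agent acts on its own.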

@inproceedings{merler2025guiding, title = {Guiding Reinforcement Learning with Selective Vision-Language Model Supervision}, author = {Merler, Matteo and Bonetta, Giovanni and Magnini, Bernardo}, booktitle = {ECAI 2025 Workshop on AI-based Planning for Complex Real-World Applications (CAIPI'25)}, editor = {Niggemann, Oliver and Biswas, Gautam and Micheli, Andrea and Heesch, René and Diedrich, Alexander and Ehrhardt, Jonas and Widulle, Niklas}, publisher = {CEUR Workshop Proceedings}, pages = {39--51}, year = {2025}, month = oct, address = {Bologna, Italy}, }

- ViPlan: A Benchmark for Visual Planning with Symbolic Predicates and Vision-Language Models
  Matteo Merler*, Nicola Dainese*, Minttu Alakuijala, Giovanni Bonetta, Pietro Ferrazzi, Yu Tian, Bernardo Magnini, and Pekka Marttinen
  May 2025

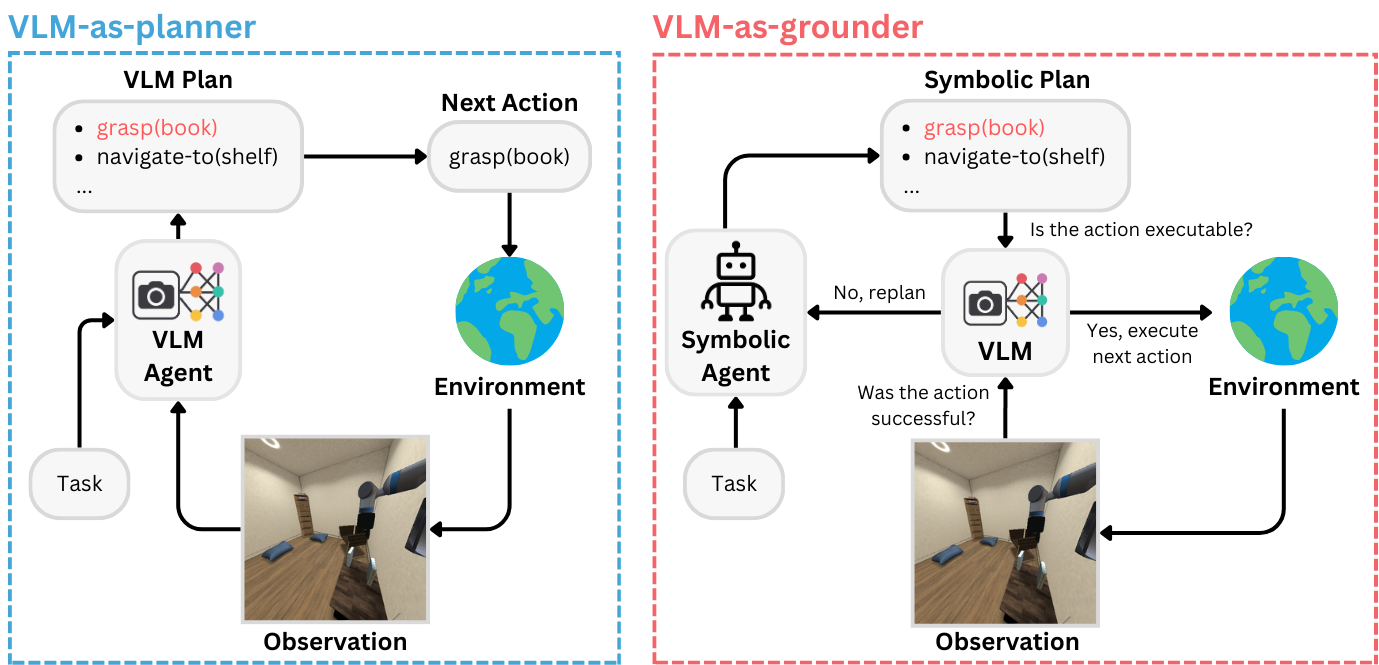

Integrating Large Language Models with symbolic planners is a promising direction for obtaining verifiable and grounded plans compared to planning in natural language, with recent works extending this idea to visual domains using Vision-Language Models (VLMs). However, rigorous comparison between VLM-grounded symbolic approaches and methods that plan directly with a VLM has been hindered by a lack of common environments, evaluation protocols and model coverage. We introduce ViPlan, the first open-source benchmark for Visual Planning with symbolic predicates and VLMs. ViPlan features a series of increasingly challenging tasks in two domains: a visual variant of the classic Blocksworld planning problem and a simulated household robotics environment. We benchmark nine open-source VLM families across multiple sizes, along with selected closed models, evaluating both VLM-grounded symbolic planning and using the models directly to propose actions. We find symbolic planning to outperform direct VLM planning in Blocksworld, where accurate image grounding is crucial, whereas the opposite is true in the household robotics tasks, where commonsense knowledge and the ability to recover from errors are beneficial. Finally, we show that across most models and methods, there is no significant benefit to using Chain-of-Thought prompting, suggesting that current VLMs still struggle with visual reasoning.

@misc{merler2025viplanbenchmarkvisualplanning, title = {ViPlan: A Benchmark for Visual Planning with Symbolic Predicates and Vision-Language Models}, author = {Merler, Matteo and Dainese, Nicola and Alakuijala, Minttu and Bonetta, Giovanni and Ferrazzi, Pietro and Tian, Yu and Magnini, Bernardo and Marttinen, Pekka}, year = {2025}, month = may, eprint = {2505.13180}, archiveprefix = {arXiv}, primaryclass = {cs.AI}, url = {https://arxiv.org/abs/2505.13180}, }

2024

- Generating Code World Models with Large Language Models Guided by Monte Carlo Tree Search
  In Advances in Neural Information Processing Systems, May 2024

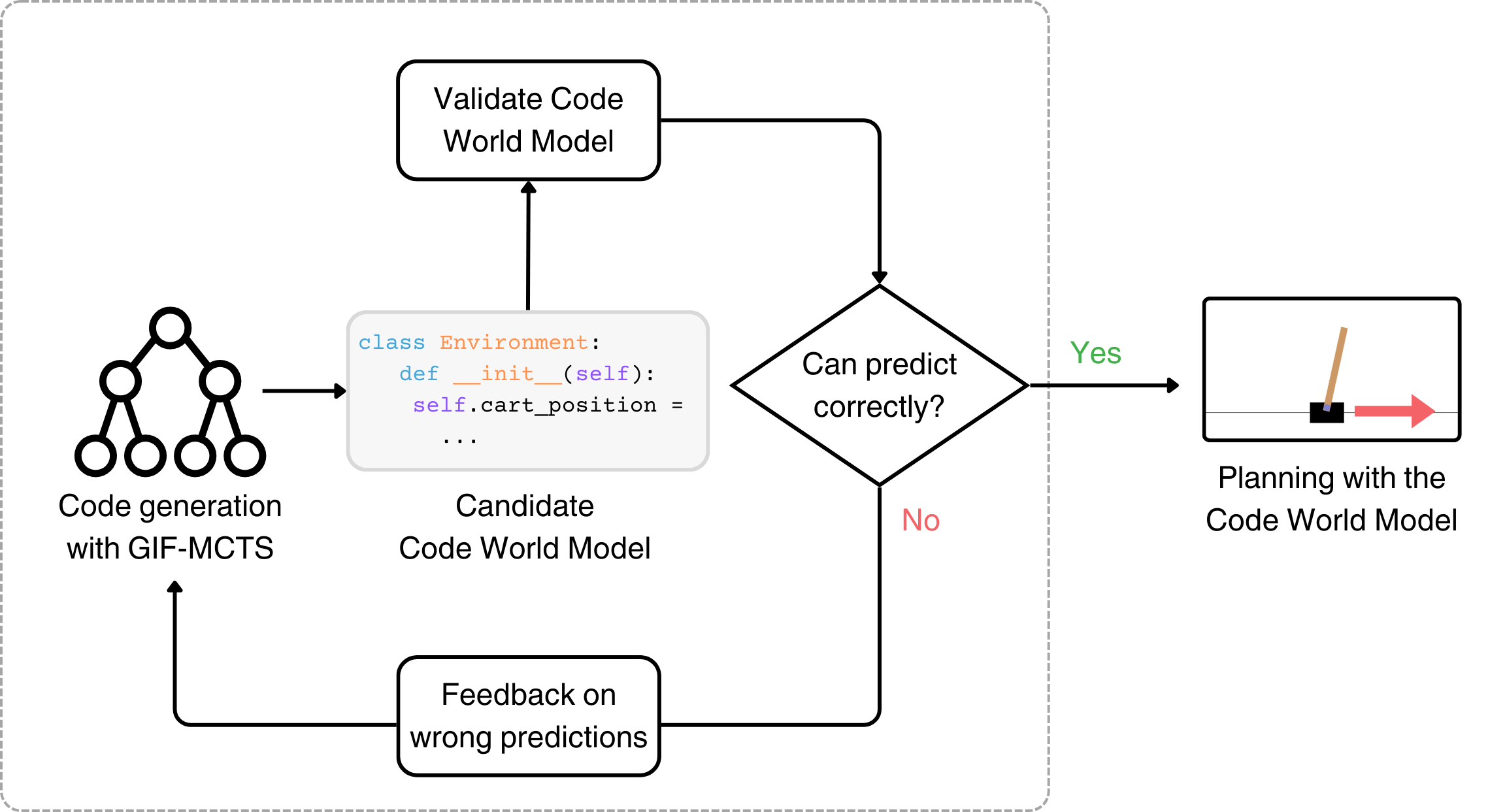

In this work we consider Code World Models, world models generated by a Large Language Model (LLM) in the form of Python code for model-based Reinforcement Learning (RL). Calling code instead of LLMs for planning has potential to be more precise, reliable, interpretable, and extremely efficient. However, writing appropriate Code World Models requires the ability to understand complex instructions, to generate exact code with non-trivial logic and to self-debug a long program with feedback from unit tests and environment trajectories. To address these challenges, we propose Generate, Improve and Fix with Monte Carlo Tree Search (GIF-MCTS), a new code generation strategy for LLMs. To test our approach in an offline RL setting, we introduce the Code World Models Benchmark (CWMB), a suite of program synthesis and planning tasks comprised of 18 diverse RL environments paired with corresponding textual descriptions and curated trajectories. GIF-MCTS surpasses all baselines on the CWMB and two other benchmarks, and we show that the Code World Models synthesized with it can be successfully used for planning, resulting in model-based RL agents with greatly improved sample efficiency and inference speed.

@inproceedings{dainese2024generating, author = {Dainese, Nicola and Merler, Matteo and Alakuijala, Minttu and Marttinen, Pekka}, booktitle = {Advances in Neural Information Processing Systems}, editor = {Globerson, A. and Mackey, L. and Belgrave, D. and Fan, A. and Paquet, U. and Tomczak, J. and Zhang, C.}, pages = {60429--60474}, publisher = {Curran Associates, Inc.}, title = {Generating Code World Models with Large Language Models Guided by Monte Carlo Tree Search}, url = {https://proceedings.neurips.cc/paper_files/paper/2024/hash/6f479ea488e0908ac8b1b37b27fd134c-Abstract-Conference.html}, volume = {37}, year = {2024}, }

- In-Context Symbolic Regression: Leveraging Large Language Models for Function Discovery
  In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 4: Student Research Workshop), Aug 2024

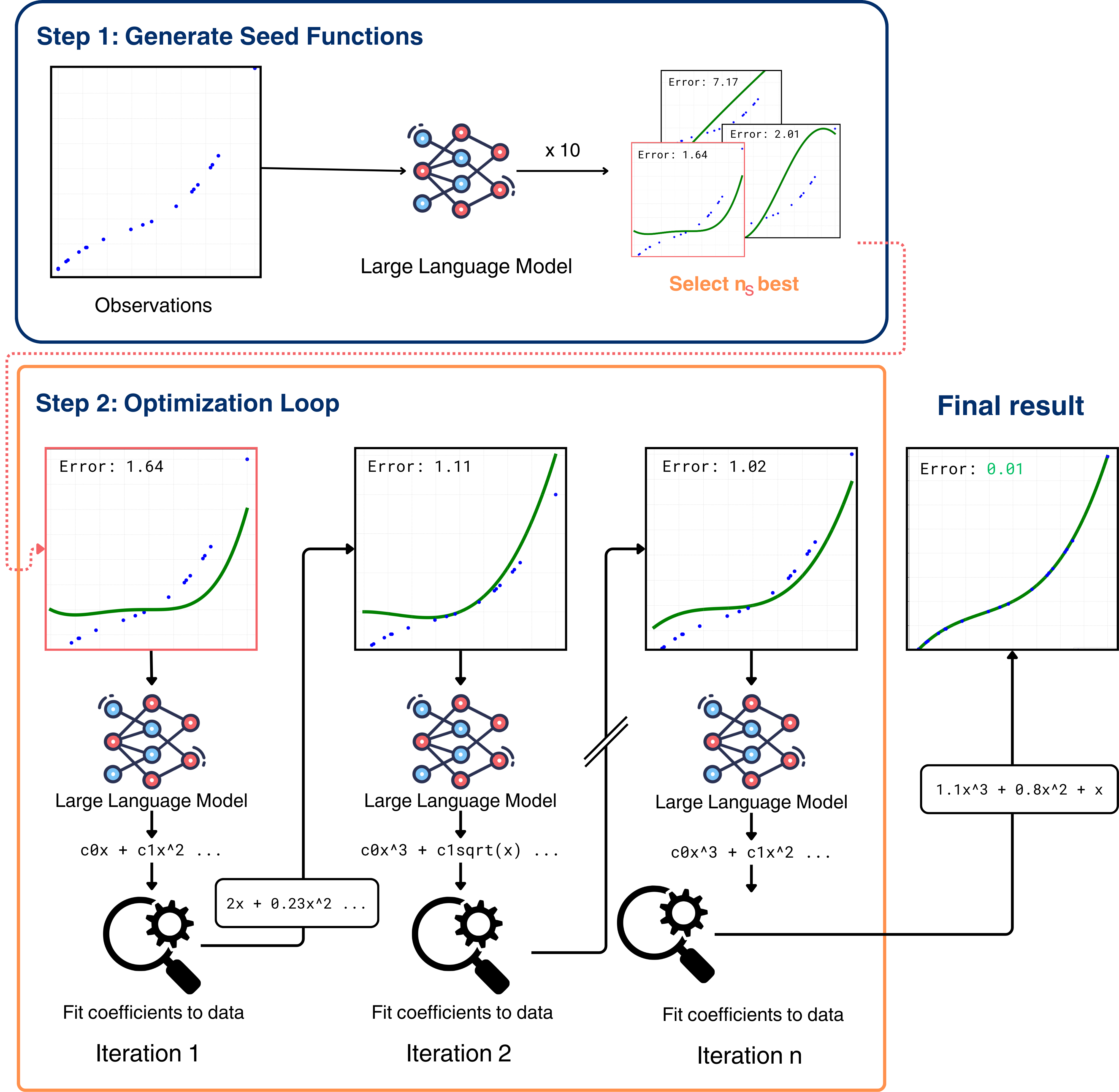

State-of-the-art Symbolic Regression (SR) methods currently build specialized models, while the application of Large Language Models (LLMs) remains largely unexplored. In this work, we introduce the first comprehensive framework that utilizes LLMs for the task of SR. We propose In-Context Symbolic Regression (ICSR), an SR method which iteratively refines a functional form with an LLM and determines its coefficients with an external optimizer. ICSR leverages LLMs’ strong mathematical prior both to propose an initial set of possible functions given the observations and to refine them based on their errors. Our findings reveal that LLMs are able to successfully find symbolic equations that fit the given data, matching or outperforming the overall performance of the best SR baselines on four popular benchmarks, while yielding simpler equations with better out-of-distribution generalization.
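The division of labor between proposing a functional form and fitting its coefficients can be sketched as follows. This is an illustrative toy, not ICSR itself: the fixed `CANDIDATES` table stands in for LLM proposals, every form is restricted to `y = a * f(x) + b`, and closed-form least squares replaces the external optimizer. The iterative refinement step, where the LLM sees the errors and proposes new forms, is omitted.

```python
import math

# Hypothetical stand-in for LLM-proposed functional forms, each y = a * f(x) + b.
CANDIDATES = {
    "a*x + b": lambda x: x,
    "a*x**2 + b": lambda x: x * x,
    "a*sin(x) + b": math.sin,
    "a*exp(x) + b": math.exp,
}


def fit_coefficients(basis, xs, ys):
    """Closed-form least squares for y = a * basis(x) + b; returns (a, b, mse)."""
    fs = [basis(x) for x in xs]
    n = len(xs)
    mean_f = sum(fs) / n
    mean_y = sum(ys) / n
    var_f = sum((f - mean_f) ** 2 for f in fs)
    cov = sum((f - mean_f) * (y - mean_y) for f, y in zip(fs, ys))
    a = cov / var_f if var_f > 0 else 0.0
    b = mean_y - a * mean_f
    mse = sum((a * f + b - y) ** 2 for f, y in zip(fs, ys)) / n
    return a, b, mse


def best_candidate(xs, ys, candidates=CANDIDATES):
    """Fit every candidate form and return the one with the lowest error."""
    scored = {name: fit_coefficients(f, xs, ys) for name, f in candidates.items()}
    name = min(scored, key=lambda k: scored[k][2])
    return name, scored[name]


if __name__ == "__main__":
    xs = [0.0, 0.5, 1.0, 1.5, 2.0]
    ys = [3.0 * x * x + 1.0 for x in xs]
    print(best_candidate(xs, ys))
```

In a full loop, the per-candidate errors would be fed back to the model as context for the next round of proposals.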

@inproceedings{merler2024incontext, title = {In-Context Symbolic Regression: Leveraging Large Language Models for Function Discovery}, author = {Merler, Matteo and Haitsiukevich, Katsiaryna and Dainese, Nicola and Marttinen, Pekka}, editor = {Fu, Xiyan and Fleisig, Eve}, booktitle = {Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 4: Student Research Workshop)}, month = aug, year = {2024}, address = {Bangkok, Thailand}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.acl-srw.49/}, doi = {10.18653/v1/2024.acl-srw.49}, pages = {427--444}, }

* Denotes equal contribution